A deep-learning system for isolating individual instruments from orchestral recordings.

One of the objectives of the REPERTORIUM project is sound source separation in classical music recordings, that is, isolating each instrument from an orchestral performance.

To reach this goal, the team is developing novel deep-learning models and dedicated datasets for training and evaluation.

A central part of this effort is the creation of both real and synthetic datasets of orchestral music. These datasets make it possible to train robust machine-learning models that separate and enhance individual instrument tracks from complex audio mixtures.

Real Recordings Dataset

At REPERTORIUM, Mozart’s Symphony No. 40 in G minor and Tchaikovsky’s Romeo and Juliet were recorded at The Spheres Recording Studio. Instead of using the traditional orchestral setup, each instrument was recorded individually. The resulting dataset offers around one hour of authentic studio performances in which every instrument has its own isolated track, providing clean ground-truth references for source separation research.
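Because every instrument is available as a separate track, a mixture can be formed by summing the stems, and each stem then serves as the exact reference for its instrument. The following is a minimal sketch of that idea, with synthetic sine tones standing in for the actual recordings. The function names `mix_stems` and `sdr_db`, the sample rate, and the plain signal-to-distortion ratio used as a metric are illustrative assumptions, not part of the REPERTORIUM tooling.

```python
import numpy as np


def mix_stems(stems):
    """Sum equally long mono stems (dict name -> 1-D array) into one mixture."""
    return np.sum(np.stack(list(stems.values())), axis=0)


def sdr_db(reference, estimate, eps=1e-12):
    """Plain signal-to-distortion ratio in dB between a reference and an estimate."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    signal_power = np.sum(reference ** 2)
    error_power = np.sum((reference - estimate) ** 2)
    return 10.0 * np.log10((signal_power + eps) / (error_power + eps))


# Stand-in stems: two sine tones instead of real instrument recordings.
sample_rate = 16000  # Hz, assumed for illustration
t = np.arange(sample_rate) / sample_rate
stems = {
    "violin": 0.5 * np.sin(2 * np.pi * 440.0 * t),
    "cello": 0.5 * np.sin(2 * np.pi * 110.0 * t),
}
mixture = mix_stems(stems)

# Using the unprocessed mixture as the "estimate" gives a baseline score;
# a separation model should improve on it.
baseline = sdr_db(stems["violin"], mixture)
```

Scoring the unprocessed mixture against a stem gives a simple baseline, and any useful separation model should score higher than that against the same reference.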

Synthetic Dataset: SynthSOD

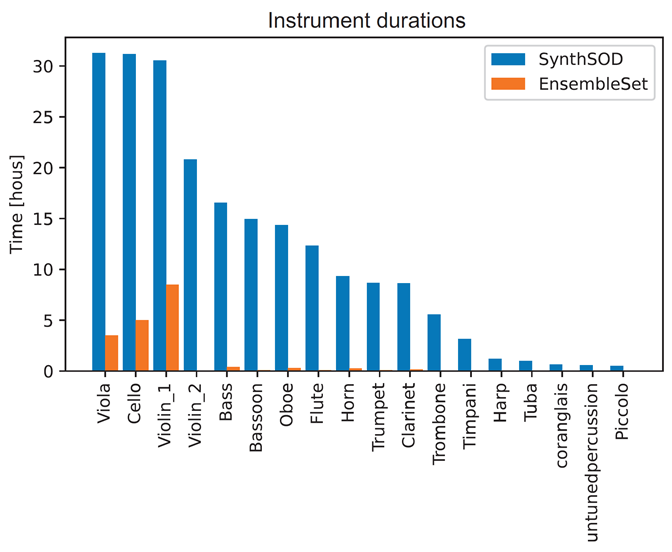

The resulting dataset, called SynthSOD [1], contains more than 47 hours of classical music with separation references for most of the instruments that we can expect to find in a symphonic orchestra.

Score-informed Source Separation

Because the model does not take the audio as its input, models trained on synthetic audio can obtain good results when applied to real recordings [2]. Following this approach, we have obtained some of the best separation results for small ensembles to date.

Instrument Deblending for Production

However, the signals of these close microphones contain not only the sound of the nearest instrument, but also a large amount of interference (also known as bleeding) from the other instruments.
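As a rough illustration of the problem, bleeding can be modeled as each close microphone picking up its own instrument at full level plus attenuated copies of the others. The sketch below uses an instantaneous gain matrix, which is a strong simplification (real bleeding also involves propagation delays and room reverberation). The names `simulate_close_mics`, `signal_to_interference_db`, and `bleed_gain`, as well as the noise signals used as sources, are hypothetical.

```python
import numpy as np


def simulate_close_mics(sources, bleed_gain=0.3):
    """Return close-mic signals for sources of shape (n_instruments, n_samples).

    Each microphone receives its own instrument with gain 1 and every other
    instrument with gain `bleed_gain` (an assumed, instantaneous mixing model).
    """
    n = sources.shape[0]
    gains = np.full((n, n), bleed_gain)
    np.fill_diagonal(gains, 1.0)
    return gains @ sources


def signal_to_interference_db(sources, mics, index):
    """Ratio in dB of the target instrument to the bleed in its own close mic."""
    target = sources[index]
    interference = mics[index] - target
    return 10.0 * np.log10(np.sum(target ** 2) / np.sum(interference ** 2))


rng = np.random.default_rng(0)
sources = rng.standard_normal((3, 16000))  # three stand-in "instruments"
mics = simulate_close_mics(sources, bleed_gain=0.3)
sir = signal_to_interference_db(sources, mics, index=0)
```

In these terms, a deblending model tries to recover `sources` from `mics`, which corresponds to raising the signal-to-interference ratio of each close-microphone track.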

At REPERTORIUM, we are currently developing new deep-learning models to remove this bleeding, which simplifies the work of audio engineers producing classical music recordings.

Audio Engineering

Improved mixing and mastering workflows through automatic instrument deblending and noise reduction.

Music Information Retrieval

Enables precise analysis of orchestration, performance dynamics, and timbral structure.

Dataset Contribution

Public release of both real and synthetic datasets will provide essential benchmarks for future research in source separation.

[1] J. Garcia-Martinez, D. Diaz-Guerra, A. Politis, T. Virtanen, J. J. Carabias-Orti and P. Vera-Candeas, in IEEE Open Journal of Signal Processing, vol. 6, pp. 129-137, 2025.

[2] E. Tunturi, D. Diaz-Guerra, A. Politis and T. Virtanen, in 2025 33rd European Signal Processing Conference (EUSIPCO), Palermo, Italy, 2025.